In industrial automation, the choice between discrete and analog sensors plays a pivotal role in determining the efficiency, accuracy, and reliability of systems across various industries. Both sensor types offer distinct advantages and applications tailored to specific industrial needs. In this post, I examine the differences between discrete and analog sensors, highlighting their applications and when to choose each in industries ranging from manufacturing to energy production.

Understanding discrete sensors

Discrete sensors provide outputs that take one of a fixed set of states, most often simply on or off. The output changes state only when a specific condition is met, such as a measured value crossing a defined threshold. Proximity sensors, limit switches, and encoders are common examples.
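To make the threshold behavior concrete, here is a minimal sketch of how a raw reading might be turned into an on/off state. The function name, the threshold values, and the use of two separate thresholds (hysteresis, which keeps the output from chattering near the switching point) are my own assumptions for illustration, not a description of any particular sensor.

```python
def discrete_output(reading, previous_state, on_threshold=10.0, off_threshold=8.0):
    """Convert a raw reading into an on/off state.

    Hypothetical thresholds: the output switches on at or above
    on_threshold, switches off at or below off_threshold, and
    otherwise keeps its previous state (hysteresis).
    """
    if reading >= on_threshold:
        return True
    if reading <= off_threshold:
        return False
    return previous_state


def run_sequence(readings, initial_state=False):
    """Apply discrete_output to a sequence of readings and collect the states."""
    states = []
    state = initial_state
    for reading in readings:
        state = discrete_output(reading, state)
        states.append(state)
    return states


if __name__ == "__main__":
    # Illustrative readings only, not measured data.
    print(run_sequence([5.0, 9.0, 10.5, 9.0, 7.5]))
```

However the reading varies, the consumer of this signal only ever sees two states, which is what makes discrete sensors simple to wire into control logic.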

Applications of discrete sensors in various industries

Discrete sensors play integral roles across various industries due to their versatility and reliability. The following applications highlight the broad spectrum of uses, showcasing their importance in optimizing industrial processes and maintaining high performance and safety levels.

In manufacturing, discrete sensors are extensively used to detect presence on assembly lines. They ensure efficient production processes by accurately identifying the presence or absence of components.

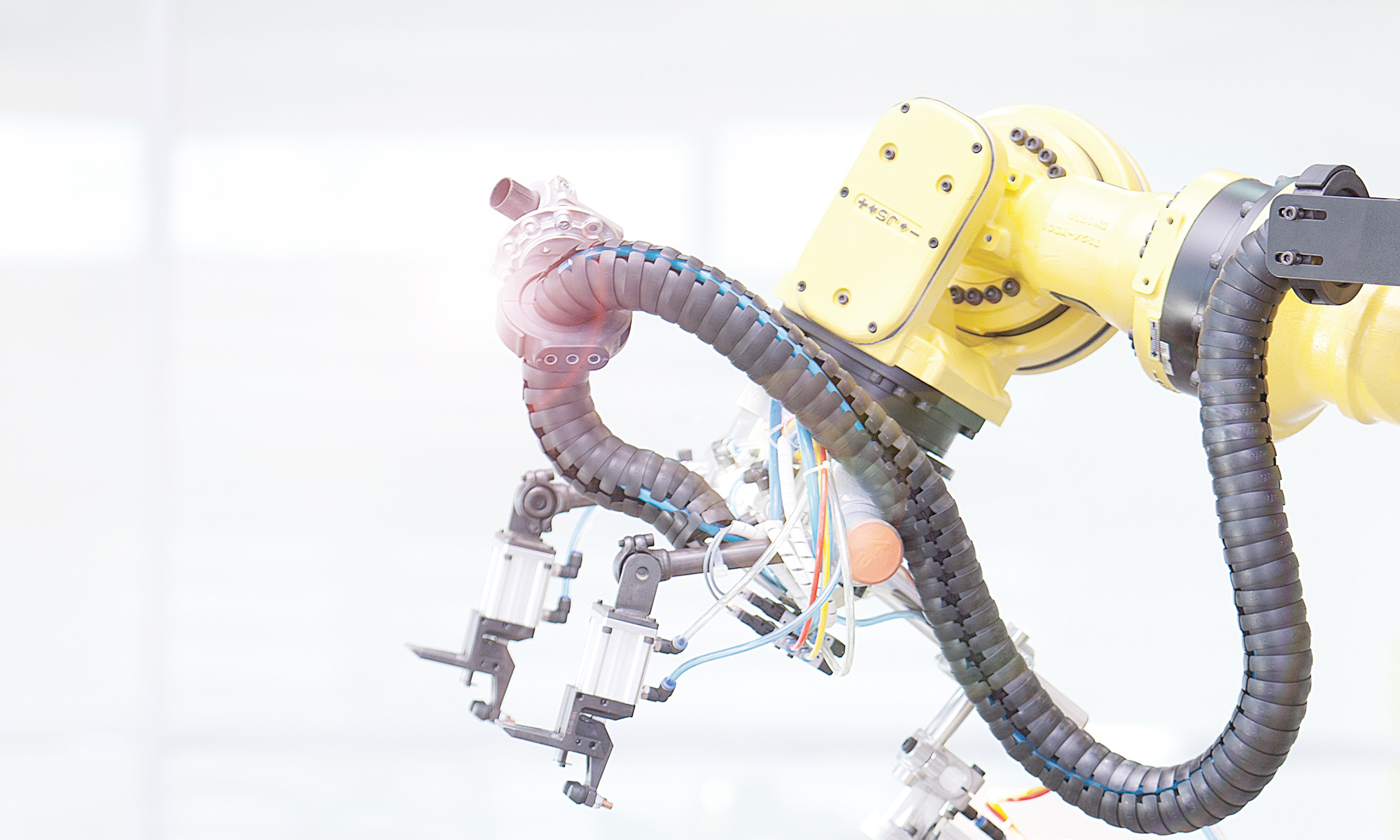

In the automotive industry, discrete sensors are crucial for position sensing. They precisely detect the positions of robotic arms and components in automated manufacturing processes.

Discrete sensors are essential for fault detection in food processing plants. They quickly identify anomalies like overfilling or equipment malfunctions to maintain product quality and safety standards.

When to use discrete sensors

Discrete sensors are the right fit when an application needs only a simple on/off answer or a discrete numerical output, such as whether a part is present or a limit has been reached. They are also robust and dependable, which makes them well suited to critical applications where accuracy and repeatability are paramount.

Understanding analog sensors

Analog sensors provide continuous outputs proportional to the measured quantity, offering a wide range of values rather than discrete states. They generate signals that vary in magnitude based on changes in the measured parameter. Temperature sensors, pressure transducers, and humidity sensors are examples of analog sensors.
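As an illustration of a proportional output, many industrial analog sensors transmit their reading as a 4-20 mA current signal, which the receiving controller scales linearly back into engineering units. The sketch below assumes that convention; the function name and the 0 to 10 bar pressure range in the usage line are hypothetical.

```python
def scale_current_loop(current_ma, range_min, range_max, low_ma=4.0, high_ma=20.0):
    """Linearly map a loop current (mA) onto the sensor's measuring range.

    Assumes a 4-20 mA signal by default: low_ma corresponds to range_min
    and high_ma corresponds to range_max.
    """
    if not low_ma <= current_ma <= high_ma:
        # A current outside the span usually points to a wiring or sensor fault.
        raise ValueError(f"current {current_ma} mA is outside {low_ma}-{high_ma} mA")
    fraction = (current_ma - low_ma) / (high_ma - low_ma)
    return range_min + fraction * (range_max - range_min)


if __name__ == "__main__":
    # Hypothetical pressure transducer with a 0 to 10 bar range.
    print(scale_current_loop(12.0, 0.0, 10.0))
```

Unlike a discrete signal, every value between the two ends of the range is meaningful here, so the controller can react to small changes rather than only to a threshold being crossed.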

Applications of analog sensors in various industries

Analog sensors are crucial in the oil and gas industry for process control, continuously monitoring parameters such as pressure and temperature in pipelines and refining operations to ensure optimal performance and safety.

In chemical processing plants, analog sensors are vital in feedback control systems, providing real-time data for monitoring variables like pH levels and chemical concentrations. This data ensures precise process control and product quality assurance.

Analog sensors are also employed in renewable energy systems, such as solar and wind farms, for monitoring environmental conditions and optimizing the performance of energy generation equipment based on factors like sunlight intensity and wind speed.

When to use analog sensors

Analog sensors offer precise data monitoring and are suitable when applications require continuous monitoring of parameters with a wide range of values. They also offer high-resolution output signals, making them useful for applications where fine-grained data acquisition is necessary for process optimization or analysis.
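In practice, how fine-grained the acquired data is also depends on the analog-to-digital converter (ADC) that reads the signal. The sketch below estimates the smallest change a system can distinguish, assuming the measuring range is divided evenly across the converter's levels. The function name and the 0 to 100 range are hypothetical, and real input modules may scale their counts differently.

```python
def smallest_step(range_min, range_max, adc_bits):
    """Estimate the smallest measurable change for a given ADC bit depth.

    Assumes the full measuring range is spread evenly over 2**adc_bits levels.
    """
    levels = 2 ** adc_bits
    return (range_max - range_min) / levels


if __name__ == "__main__":
    # Hypothetical 0 to 100 unit range read at two different bit depths.
    for bits in (12, 16):
        print(bits, smallest_step(0.0, 100.0, bits))
```

Under these assumptions, each additional bit halves the step size, which is why the bit depth of the input hardware matters alongside the sensor itself when fine-grained data is the goal.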

Informed sensor selection for industrial innovation

By understanding the unique features and applications of discrete and analog sensors across industries, industrial automation professionals can make informed decisions to optimize system performance, efficiency, and reliability. Whether enhancing manufacturing processes or ensuring safety in hazardous environments, choosing between discrete and analog sensors is instrumental in driving innovation and progress across diverse industrial sectors.