Industrial image processing is essentially a requirement in modern manufacturing. Vision solutions can deliver visual quality control, identification and positioning. While vision systems have gotten easier to install and use, there isn’t a one-size-fits-all solution. Knowing how and when you should use optical filtering in a machine vision application is a vital part of making sure your system delivers everything you need.

So when should you use optical filtering in your machine vision applications? ALWAYS. Optical filtering increases contrast, usable resolution and image quality. Most importantly, it dramatically reduces ambient light interference, which is the number one reason a machine vision application doesn’t work as expected.

Different applications require different types of filtering. I’ve highlighted the most common ones below.

Bandpass Filtering

Different wavelengths of light will enhance or de-emphasize certain aspects of the target you are inspecting. Therefore, the first thing you want to do is select the color/wavelength that will give you the best contrast for your application. For example, if you are using a red area light that emits at 617nm (Figure 1), you will want to select a filter (Figure 3) to attach to the lens (Figure 2) that passes the wavelength of the area light and filters out the rest of the spectrum. This technique is called bandpass filtering (Figure 4).

This allows only the light from the area light to pass through, while all other light is filtered out. To further illustrate the kinds of features that can be emphasized or de-emphasized, compare the following images of the same product taken with different filters.

Another example of bandpass filtering can be seen in Figure 9, which demonstrates the benefit of using a filter in an application that reads the lot code and best-before (sell-by) date. A blue LED light source and a blue bandpass filter make the information readable; without the filter, it isn’t.
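To make the bandpass idea concrete, here is a minimal Python sketch that treats the filter as an idealized window: light inside the passband gets through, everything else is blocked. The 50 nm passband width and the ambient wavelengths are illustrative assumptions, not values from a real filter datasheet, and real filters have sloped edges rather than a hard cutoff.

```python
def passes_bandpass(wavelength_nm, center_nm, width_nm):
    """Idealized bandpass: True if the wavelength lies inside the passband."""
    half_width = width_nm / 2.0
    return center_nm - half_width <= wavelength_nm <= center_nm + half_width


# Hypothetical filter: passband centered on the 617 nm red light, 50 nm wide.
CENTER_NM, WIDTH_NM = 617.0, 50.0

# Illustrative wavelengths only, not measured values.
sources = {
    "red area light": 617.0,
    "blue ambient": 470.0,
    "green ambient": 530.0,
    "near-infrared ambient": 850.0,
}

for name, wavelength in sources.items():
    passed = passes_bandpass(wavelength, CENTER_NM, WIDTH_NM)
    print(f"{name} ({wavelength:.0f} nm): {'passes' if passed else 'blocked'}")
```

Only the light that matches the filter reaches the sensor, which is why the filter should be chosen to match the light source.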

Narrow Bandpass Filtering

Narrow bandpass filtering, shown in Figure 10, is mostly used for laser line dimensional measurement applications (Figure 11). This technique provides more ambient light immunity than normal bandpass filtering. Because it passes an even narrower band of wavelengths, it also produces a high-contrast, almost black-and-white image, which is exactly what you want for this application.
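A rough way to see why a narrower passband gives more ambient light immunity is to estimate how much ambient light each filter lets through. The sketch below rests on simplifying assumptions that are not from this article: ambient light is spread evenly across the sensor's range, the sensor responds equally from 400 to 1000 nm, and the filters are ideal windows. The 660 nm laser wavelength and both passband widths are hypothetical.

```python
def ambient_fraction_passed(center_nm, width_nm, sensor_range_nm=(400.0, 1000.0)):
    """Fraction of spectrally flat ambient light that an ideal bandpass lets through."""
    sensor_lo, sensor_hi = sensor_range_nm
    # Clip the passband to the range the sensor can actually see.
    pass_lo = max(sensor_lo, center_nm - width_nm / 2.0)
    pass_hi = min(sensor_hi, center_nm + width_nm / 2.0)
    return max(0.0, pass_hi - pass_lo) / (sensor_hi - sensor_lo)


LASER_NM = 660.0  # hypothetical red laser line

for label, width in [("bandpass", 60.0), ("narrow bandpass", 10.0)]:
    fraction = ambient_fraction_passed(LASER_NM, width)
    print(f"{label} ({width:.0f} nm wide): {fraction:.1%} of ambient light passes")
```

Under these assumptions the laser line passes both filters, but the narrow filter admits only a fraction of the ambient light that the wider one does.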

Shortpass Filtering

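Another optical filtering technique is shortpass filtering, shown in Figure 12. A shortpass filter passes wavelengths below its cutoff and blocks those above it. It is commonly used in color camera imaging because it blocks the IR light that would otherwise distort colors (filters sold for this purpose often block UV as well), giving you a true color image.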

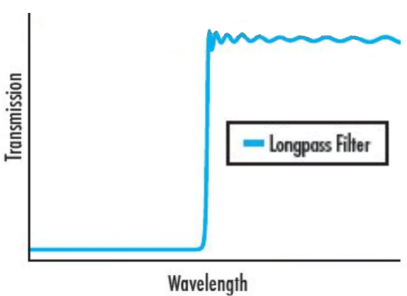

Longpass Filtering

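Longpass filtering, shown in Figure 13, is the opposite of shortpass filtering: it passes wavelengths above its cutoff and blocks those below it. It is often used in IR applications where you want to suppress the visible light spectrum.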
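Shortpass and longpass filters are mirror images of each other, which a small Python sketch can show. It models both as ideal step functions; the 700 nm and 800 nm cutoffs and the sample wavelengths are hypothetical values chosen for illustration only.

```python
def shortpass(wavelength_nm, cutoff_nm):
    """Idealized shortpass: transmits wavelengths at or below the cutoff."""
    return wavelength_nm <= cutoff_nm


def longpass(wavelength_nm, cutoff_nm):
    """Idealized longpass: transmits wavelengths at or above the cutoff."""
    return wavelength_nm >= cutoff_nm


SHORTPASS_CUTOFF_NM = 700.0  # hypothetical cutoff for a color camera
LONGPASS_CUTOFF_NM = 800.0   # hypothetical cutoff for an IR application

# Sample wavelengths: blue, green, red, and two near-infrared values.
for wavelength in (450.0, 550.0, 650.0, 850.0, 940.0):
    sp = "passes" if shortpass(wavelength, SHORTPASS_CUTOFF_NM) else "blocked"
    lp = "passes" if longpass(wavelength, LONGPASS_CUTOFF_NM) else "blocked"
    print(f"{wavelength:.0f} nm: shortpass {sp}, longpass {lp}")
```

With these cutoffs, the shortpass filter keeps the visible colors and rejects the infrared, while the longpass filter does the reverse.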

Neutral Density Filtering

Neutral density filtering is regularly used in LED inspection. Without filtering, light coming from the LEDs completely saturates the image, making it difficult, if not impossible, to do a proper inspection. A neutral density filter acts like sunglasses for your camera: it reduces the amount of light the camera sees evenly across the full spectrum.
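Neutral density filters are usually rated by optical density (OD), where the transmitted fraction of light is 10 raised to the power of minus OD. The short Python sketch below converts a few OD ratings into transmission; the specific ratings are just examples, so check the datasheet of whatever filter you actually use.

```python
def nd_transmission(optical_density):
    """Fraction of light transmitted by a neutral density filter: T = 10^(-OD)."""
    return 10.0 ** (-optical_density)


# Example OD ratings, chosen for illustration.
for od in (0.3, 0.6, 0.9, 2.0):
    t = nd_transmission(od)
    print(f"OD {od}: passes {t:.1%} of the light (about 1/{1 / t:.0f})")
```

A higher OD means darker "sunglasses", so you can pick the rating that brings a saturated LED back into the camera's usable range.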

Polarization Filtering

Polarization filtering is the best choice when your target has highly reflective or shiny surfaces, because it reduces glare on the target. You can clearly see the benefits in Figure 14.
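One common way to deploy polarization filtering, assumed here rather than described in this article, is to put a polarizer on the light and a second polarizer (the analyzer) on the lens. Glare largely keeps the polarization of the light source, so Malus's law applies: the fraction passed is the squared cosine of the angle between the two polarizers. Diffuse reflection from the part itself is treated as fully depolarized, so an ideal analyzer passes half of it at any angle. This is an idealized sketch; real polarizers and surfaces will not behave this cleanly.

```python
import math


def polarized_fraction_passed(angle_deg):
    """Malus's law: fraction of linearly polarized light passing an ideal analyzer."""
    return math.cos(math.radians(angle_deg)) ** 2


# Idealized assumption: diffuse light is fully depolarized,
# and an ideal polarizer passes half of unpolarized light.
DIFFUSE_FRACTION_PASSED = 0.5

for angle in (0, 45, 90):
    glare = polarized_fraction_passed(angle)
    print(
        f"analyzer at {angle:>2} deg: glare passed {glare:.0%}, "
        f"diffuse passed {DIFFUSE_FRACTION_PASSED:.0%}"
    )
```

In this model, rotating the analyzer toward 90 degrees removes the glare while the image of the part itself stays visible.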
