In my decade of experience supporting sales organizations as an application and product specialist across various industries, I’ve observed a common trend among customers: The majority tend to focus solely on vibration when it comes to condition monitoring for their assets. While vibration analysis is crucial for detecting mechanical component issues, it’s essential to recognize that it’s not the only method available. In this blog, I present a straightforward four-step process to guide maintenance technicians in implementing effective condition monitoring beyond vibration analysis.

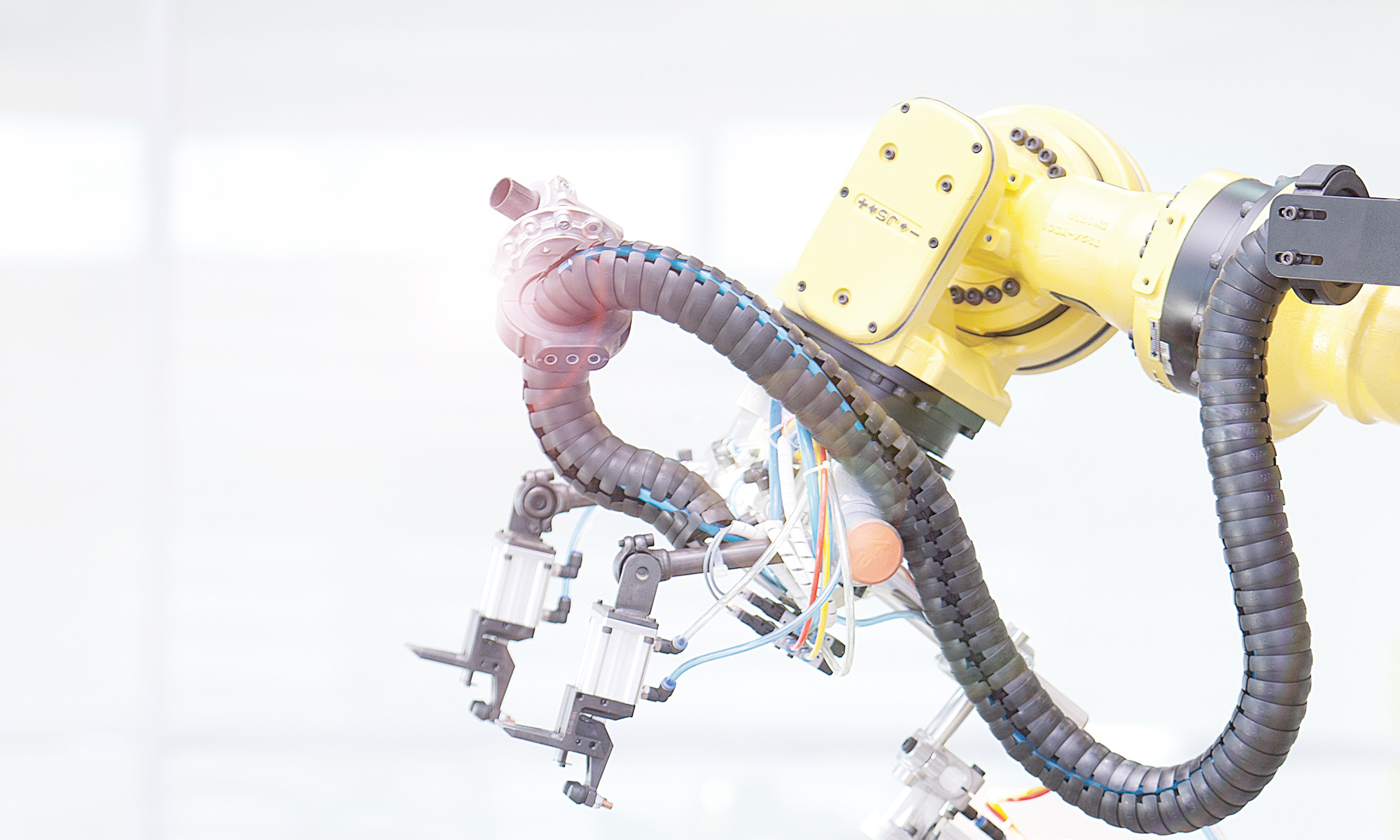

Step 1: Identify critical assets. Begin by finding the key assets or machines in your plant that would cause significant unplanned downtime if they were to fail. Understanding which assets are critical is the first step in establishing an effective condition-monitoring strategy.
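One way to make Step 1 concrete is to score each asset and sort the list. The sketch below is only an illustration: the `Asset` class, the scoring formula (downtime hours × cost per hour × failures per year), and all the numbers are assumptions for the example, not figures from a real plant.

```python
from dataclasses import dataclass


@dataclass
class Asset:
    name: str
    downtime_hours: float     # assumed hours of unplanned downtime per failure
    cost_per_hour: float      # assumed cost of one hour of downtime
    failures_per_year: float  # assumed failure frequency

    def criticality(self) -> float:
        # Hypothetical score: expected yearly downtime cost.
        return self.downtime_hours * self.cost_per_hour * self.failures_per_year


def rank_assets(assets):
    """Return assets sorted from most to least critical."""
    return sorted(assets, key=lambda a: a.criticality(), reverse=True)


# Illustrative values only.
assets = [
    Asset("Electrical cabinet", 12, 2500, 0.1),
    Asset("Hydraulic power unit", 8, 2000, 0.5),
    Asset("Air compressor", 4, 1500, 1.0),
]

for asset in rank_assets(assets):
    print(f"{asset.name}: {asset.criticality():.0f}")
```

The assets at the top of the ranking are the natural candidates for the next steps.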

Step 2: Determine failure modes. Once you know the critical assets, figure out the potential failure modes of each machine. Knowing how a machine might fail enables you to choose the most relevant sensing technology for monitoring specific parameters contributing to potential failures.

Step 3: Choose sensing technology. Consider the failure modes you found in Step 2 and choose the appropriate sensing technology to monitor the parameters relevant to each machine. This step involves thinking beyond vibration analysis and exploring solutions that address diverse factors such as:

- Pressure

- Flow

- Level

- Position

- Load

- Temperature

- Humidity

- Viscosity

- Impurity/contamination
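The link between failure modes (Step 2) and sensing technology (Step 3) can be kept as a simple lookup table. The following is a minimal sketch: the failure-mode names and the `parameters_for` helper are hypothetical, and the mapping is an assumption for illustration that you would replace with your own failure analysis.

```python
# Hypothetical mapping from failure mode to the parameters worth monitoring.
FAILURE_MODE_TO_PARAMETERS = {
    "oil leak": ["level"],
    "worn air pump": ["pressure", "flow"],
    "overheating": ["temperature"],
    "moisture ingress": ["humidity"],
}


def parameters_for(failure_modes):
    """Collect the parameters to monitor, without duplicates, in first-seen order."""
    parameters = []
    for mode in failure_modes:
        # Unknown failure modes contribute nothing rather than raising an error.
        for parameter in FAILURE_MODE_TO_PARAMETERS.get(mode, []):
            if parameter not in parameters:
                parameters.append(parameter)
    return parameters


print(parameters_for(["overheating", "moisture ingress"]))
```

Keeping the mapping in one place makes it easy to review with the maintenance team before any sensors are purchased.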

Step 4: Implement a monitoring solution. Before implementing the chosen solution across the entire plant, conduct a pilot test on one machine. This step allows you to evaluate the viability of the monitoring solution and ensures it aligns with the application needs. It’s a practical approach to minimize risks and optimize the effectiveness of your condition monitoring strategy.

Condition monitoring diversity

To emphasize the diversity of condition monitoring, consider scenarios where vibration analysis falls short. For instance:

- Hydraulic Power Unit: Use a level sensor to monitor oil levels in the tank, preventing failures due to leaks.

- Air Compressor: Employ a pressure transducer and flow sensor to monitor air pressure and flow, addressing issues with a worn-out air pump.

- Electrical Cabinets: Protect critical electrical components by using ambient temperature and humidity sensors to detect elevated temperatures or moisture ingress.
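For scenarios like these, the monitoring logic often comes down to comparing a reading against a limit. Here is a minimal sketch of that idea. The signal names, the limit values, and the `check_readings` function are all assumptions chosen for illustration; real limits should come from the equipment documentation.

```python
# Hypothetical limits as (low, high); None means that bound is not checked.
LIMITS = {
    "oil_level_pct": (30.0, None),         # alert if the tank level drops too low
    "cabinet_temp_c": (None, 45.0),        # alert if the cabinet gets too hot
    "cabinet_humidity_pct": (None, 60.0),  # alert if moisture builds up
}


def check_readings(readings, limits=LIMITS):
    """Return a list of alert messages for readings outside their limits."""
    alerts = []
    for name, value in readings.items():
        low, high = limits.get(name, (None, None))
        if low is not None and value < low:
            alerts.append(f"{name} below {low}: {value}")
        elif high is not None and value > high:
            alerts.append(f"{name} above {high}: {value}")
    return alerts


# Illustrative readings only.
print(check_readings({"oil_level_pct": 25.0, "cabinet_temp_c": 40.0}))
```

A fixed threshold is the simplest possible check; trending the values over time is a natural next step once the pilot is running.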

In conclusion, break free from the confines of relying solely on vibration analysis. By following this four-step process, maintenance technicians can tailor condition monitoring strategies to the unique needs of their plants. Explore diverse sensing technologies, implement pilot solutions, and ensure you are monitoring your assets comprehensively to mitigate risks and optimize performance.